Joint modeling of multiple experiments

Often we are interested in comparing the peptides identified and quantified between multiple experimental conditions. In this case, we want to obtain valid confidence estimates for PSMs and peptides in each experiment, such that we can make a statement such as: “we detected peptide x in sample y.” To accomplish this using Percolator, we would have two options: 1) analyze each experiment with Percolator independently—that is, run Perolator once on each experiment—or 2) learn a static model from an external training set, then apply the static model to each experiment in separate Percolator runs. The former doesn’t require any additional data, but the learned models can vary between experiments (particularly if they are small experiments) and result more missing values between them. Conversly, the latter can result in a more coehisive set of PSM or peptide detections, but requires an external dataset on which to train the model.

In mokapot we’ve implemented a third strategy: when provided with multiple experiments, we learn a joint model from all of the PSMs then assign confidence estimates for each experiment individually. We’ll explore this feature in this vignette using the PSMs assigned by Tide-search for three single-cell proteomics experiments from:

Specht, Harrison, et al. “Single-cell mass-spectrometry quantifies the emergence of macrophage heterogeneity.” bioRxiv (2019): 665307.

In what follows, we’ll analyze these experiments individually and using the joint modeling strategy, then compare their performance. We’ve performed these analyses using mokapot’s Python API within a Jupyter notebook, which is available using the download link at the top of the page. In this vignette, we assume you’re somewhat familiar with the Python API; if not, consider checking out the “First steps using mokapot in Python” vignette first.

Following along locally

To run this notebook, you’ll need to have mokapot installed and have the input files saved on your computer in the same directory. You can find these files here: scope2_FP97AA.pin, scope2_FP97AB.pin, scope2_FP97AC.pin

We can set the path to the input files here:

[1]:

pin_dir = "../../../data"

Setup our Python environment

Before we can perform the analyses, we need to import the Python packages that we’ll be using. Additionally, it’s a good idea to set the random seed for reproducibility.

[2]:

import os

import mokapot

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

# Set the random seed:

np.random.seed(1)

# Colors for plotting:

colors = ("#343131", "#24B8A0")

# Find the input files:

pin_files = [os.path.join(pin_dir, f) for f in os.listdir(pin_dir)

if f.startswith("scope2_FP97A") and f.endswith(".pin")]

pin_files

[2]:

['../../../data/scope2_FP97AA.pin',

'../../../data/scope2_FP97AB.pin',

'../../../data/scope2_FP97AC.pin']

If we want messages about the mokapot’s progress throughout the analyses, then we need to enable it using the logging module:

[3]:

import logging

# True enables messages and nicely formats them:

log = False

if log:

logging.basicConfig(

level=logging.INFO,

format="%(levelname)s: %(message)s",

)

Analyze the experiments individually

We’ll start by analyzing each of the three experiments individually with mokapot:

[4]:

# A dictionary to store the results:

sep_results = {}

# Loop through the input files, analyzing each with mokapot:

for pin in pin_files:

# Read PSMs and run mokapot

print(f"Analyzing {pin}")

psms = mokapot.read_pin(pin)

results, models = mokapot.brew(psms)

# Add results to our result dictionary:

rep = os.path.split(pin)[-1].replace(".pin", "")

sep_results[rep] = results

Analyzing ../../../data/scope2_FP97AA.pin

WARNING:root:Learned model did not improve over the best feature. Now scoring by the best feature for each collection of PSMs.

Analyzing ../../../data/scope2_FP97AB.pin

Analyzing ../../../data/scope2_FP97AC.pin

Note the warning for scope3_FP97AA.pin - mokapot failed to learn a good model for the data, which is likely due to the relatively small number of PSMs in the dataset.

Now we can access the results from the dictionary:

[5]:

sep_results["scope2_FP97AA"].psms.head()

[5]:

| SpecId | Label | ScanNr | ExpMass | CalcMass | Peptide | mokapot score | mokapot q-value | mokapot PEP | Proteins | |

|---|---|---|---|---|---|---|---|---|---|---|

| 0 | target_0_11040_3_-1 | True | 11040 | 2789.4179 | 2789.4084 | K.LVQDVANNTNEEAGDGTTTATVLAR.S | 23.598709 | 0.000912 | 2.917290e-19 | sp|P10809|CH60_HUMAN |

| 1 | target_0_8060_3_-1 | True | 8060 | 2154.1931 | 2154.1860 | K.GAEAANVTGPGGVPVQGSK.Y | 21.533703 | 0.000912 | 2.563543e-17 | sp|P67809|YBOX1_HUMAN |

| 2 | target_0_11114_3_-1 | True | 11114 | 2618.3554 | 2617.3450 | K.QTTVSNSQQAYQEAFEISK.K | 20.623930 | 0.000912 | 1.841793e-16 | sp|P31946|1433B_HUMAN |

| 3 | target_0_12043_3_-1 | True | 12043 | 2502.2946 | 2502.2815 | K.GVVPLAGTN[0.98]GETTTQGLDGLSER.C | 19.626017 | 0.000912 | 1.601810e-15 | sp|P04075|ALDOA_HUMAN |

| 4 | target_0_10221_3_-1 | True | 10221 | 2424.1862 | 2424.1812 | K.EQQEAIEHIDEVQNEIDR.L | 18.178486 | 0.000912 | 3.691655e-14 | sp|P0DME0|SETLP_HUMAN\tsp|Q01105|SET_HUMAN |

Analyze the experiments with a joint model

Similarly, we’ll use the joint modeling strategy to analyze these experiments. Instead of supplying one collection of PSMs (a LinearPsmDataset) to the brew() function, we supply a list of them:

[6]:

# A dictionary to store the results:

joint_results = {}

# Read each input file:

psms_list = [mokapot.read_pin(f) for f in pin_files]

# Run mokapot on all of the files:

results, brew = mokapot.brew(psms_list)

# Add results to our result dictionary:

for pin, result in zip(pin_files, results):

rep = os.path.split(pin)[-1].replace(".pin", "")

joint_results[rep] = result

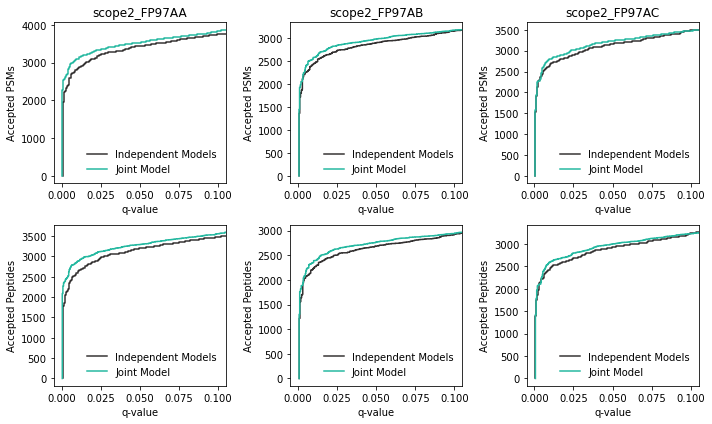

Compare Performance

Now that we’ve finished running our mokapot analyses, we’ll compare the results from both methods. First, let’s investigate if there any differences in our power to detect PSMs and peptides:

[7]:

fig, axs = plt.subplots(2, 3, figsize=(10, 6))

# Put experiments in a logical order:

experiments = list(sep_results.keys())

experiments.sort()

# Plot the perfomance of each:

gain = {}

for col, exp in zip(axs.T, experiments):

col[0].set_title(exp)

for ax, level in zip(col, ["psms", "peptides"]):

# Plot the number of PSMs accepted at each FDR threshold

sep_results[exp].plot_qvalues(level=level, ax=ax, c=colors[0],

label="Independent Models")

joint_results[exp].plot_qvalues(level=level, ax=ax, c=colors[1],

label="Joint Model")

ax.legend(frameon=False)

plt.tight_layout()

plt.show()

For these experiments, there appears to be a modest gain in power when the joint modeling strategy is used. This result was expected, because this is a subset of experiments that benefited from using a static model with Percolator.

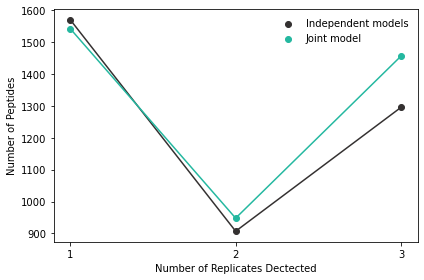

Let’s also take a look at the detected peptides that are shared across the three experiments:

[8]:

def count_reps(res_dict, fdr=0.01):

"""

Count the number of replicates in which each peptide is detected.

Parameters

----------

res_dict : Dict

A result dictionary from above.

fdr : float

The FDR threshold below which to accept peptides.

Returns

-------

pandas.DataFrame

A DataFrame with the counts for the number of peptides

detected in a number of replicates.

"""

peps = {k: set(p.peptides["Peptide"][p.peptides["mokapot q-value"] <= 0.01])

for k, p in res_dict.items()}

all_peps = peps[list(peps.keys())[0]].union(*peps.values())

reps = []

for peptide in all_peps:

reps.append(sum([peptide in r for r in peps.values()]))

ret = pd.Series(reps).value_counts().reset_index().sort_values("index")

ret.columns = ["num_reps", "num_peptides"]

return ret

sep_reps = count_reps(sep_results)

joint_reps = count_reps(joint_results)

plt.figure()

plt.plot(sep_reps["num_reps"].values, sep_reps["num_peptides"], c=colors[0])

plt.scatter(sep_reps["num_reps"].values, sep_reps["num_peptides"],

label="Independent models", color=colors[0])

plt.plot(joint_reps["num_reps"].values, joint_reps["num_peptides"], c=colors[1])

plt.scatter(joint_reps["num_reps"].values, joint_reps["num_peptides"],

label="Joint model", c=colors[1])

plt.xticks([1, 2, 3])

plt.xlabel("Number of Replicates Dectected")

plt.ylabel("Number of Peptides")

plt.legend(frameon=False)

plt.tight_layout()

plt.show()

Now we can see that using the joint modeling approach increases the number of PSMs that are detected in across all three experiments. In effect, this leads to fewer missing values in downstream tasks and can have quite a large impact when many experiments are analyzed together.

Wrapping up

In this vignette we demonstrated how the joint modeling approach available in mokapot can be valuable when analyzing datasets that consist of multiple experiments. It’s worth noting that this is particularly beneficial when the total number of PSMs or the number of high-quality PSMs is small, such as is the case with single-cell proteomics experiments we analyzed. For larger experiments, there is typically little to no difference between analyzing the experiments independently or with a joint model.